Vision and Challenges for a World with AGI

Posted by Franklin Ren on Jan 1, 2022; largely revised on Oct 28, 2023

If you've watched the movie “The Matrix”, you will be familiar with its iconic choice between the blue pill and the red pill. If you haven't, here is a short explanation: the main character, Neo, is offered a choice between two pills. The blue pill means staying in his comfort zone and continuing to live a normal but illusory life, while the red pill means confronting a harsh reality full of uncertainty, but also ripe with opportunity. This analogy has been widely adopted by science fiction to illustrate a fundamental dilemma about the future of the human race: is it better for humanity to live in a way where everyone is satisfied and happy, as depicted in works like “Brave New World”, or should humans strive to push the boundaries of technology, potentially building a civilization that spans the universe, despite the immense pain and sacrifice this would require? This debate has persisted for many years, but recently, progress toward artificial general intelligence (AGI) has introduced a third path for humanity to consider.

If we can create a powerful AGI, how could it benefit us? The answer lies in one of its fundamental capabilities: the ability to continually evolve and upgrade itself by acquiring new knowledge. This means it could tackle problems that are hard for humans, and do so more creatively, more efficiently, and with fewer resources. Consider Elon Musk's aspiration to establish cities on Mars. To realize it, humanity would need to invest enormous sums in spacecraft development, which could lower everyone's quality of life on Earth. Many people already criticize Musk's demanding treatment of his workforce, although he insists this intensity is necessary to achieve such ambitious goals. Now imagine an AGI potent enough to take on much of this challenging work and provide actionable insights into the most effective ways to develop spacecraft: we might save a large share of the time and money that space exploration has traditionally required, perhaps as much as 90%! Fusion power is another good example. Humanity has pursued it for more than seventy years and spent enormous sums on it, yet a practical fusion power plant remains out of reach. What if AGI could illuminate a path to fusion power, even providing a detailed blueprint? Such a breakthrough would chart a course for humanity's future while keeping the sacrifices to our quality of life along the way to a minimum.

Recently, another argument has emerged: AGI is not merely a nice-to-have for humanity's scientific progress, but a necessity. The argument rests on a simple observation: the time it takes to acquire the foundational knowledge needed for cutting-edge research keeps getting longer. A sufficiently gifted person in the medieval era could have learned all the mathematics known at the time before the age of 20, and an even more gifted one could have invented something new by 25. Sir Isaac Newton began developing calculus around the age of 22, an age at which even the brightest minds of our time have not finished learning all the mathematical prerequisites for independent research. That is why some mathematicians are pessimistic about whether humans will ever prove or disprove the Riemann Hypothesis: it might be out of reach within a single human lifetime. If that is true, our choices are either to pursue human genetic modification (an even riskier path for our species, which I won't discuss here) or to invest in AGI to solve such problems.

AGI could also reshape our daily lives. In a future with AGI, most of the “boring” work (painting walls, writing email replies, blah, blah, blah) could be done by AI. This would fundamentally free people from work they don't like and let them pursue the lifestyles they enjoy most. Many believe that if AI could raise humanity's aggregate productivity to that degree, a universal basic income (UBI) would become viable. People would then no longer need to worry about their livelihood, even if they didn't work at all or chose to do something society needs less but that they enjoy (e.g., music, dance, sports). Those who still want to work could, of course, do so, but purely for self-fulfillment. In fact, some places on Earth are already halfway to this kind of lifestyle, especially Nordic countries like Norway, where resources are abundant and productivity is high. A sufficiently powerful AGI might bring the entire world there. And in this vision, we wouldn't have to worry about scientific and technological progress slowing down, since AGI would be powerful enough to drive it forward.

However, there are serious challenges on the path to a future that seems so great. I am not talking about the technical difficulty here; in fact, that might be the least of our worries if we have enough time. Nor am I talking about the possibility that AI might treat humans as enemies and try to destroy us (like the machines in “The Matrix”). No, no, no. There is something more dangerous than that. The real problem we face is not what a powerful AI can do, but who controls it.

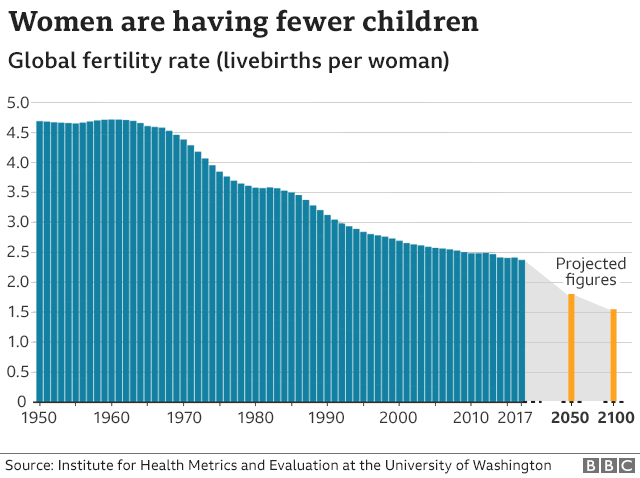

Let's imagine how the path toward a powerful AGI might unfold. In the first stage, various specialized AIs could supersede humans in many jobs, from truck driving in the Midwest to market analysis on Wall Street, but no single AI could produce every kind of product and service humans need, so the exchange and trade of goods and services would still be necessary. This stage is less concerning. The owners of these AIs still need consumers with purchasing power to buy what their AIs produce; otherwise, they could never make money or buy the things their own AIs cannot produce. At this stage, governments have an incentive to support people whose jobs are threatened or replaced by AI, whether through something like UBI or through new jobs provided directly by the government. We have seen such policies introduced many times when people lost their jobs, from the Franklin Roosevelt era to today's social democracies in Europe. We have done this before, and there is no reason to think we would stop now. You might ask whether this will force people into jobs they don't like. Maybe not. If a job is widely disliked, fewer people will take it, which drives up its wages and makes it a more attractive target for automation. Some even argue that job losses won't be a problem at all, since birth rates are already falling and populations in many countries are projected to shrink. What is really concerning is the stage that comes after.

In the second stage, AI will have replaced most jobs, and a single powerful AGI could produce every kind of product and service humans need. It still cannot make high-level decisions on its own, though: some humans are still controlling it! This will be the most dangerous stage for humanity on the path to AGI. Since the people controlling that AI (perhaps governments, perhaps multinational corporations) could produce whatever goods and services they want, they would need nothing from the rest of us. That essentially means whoever controls it could do whatever they want, including leaving the rest of us with a bare minimum livelihood, taking away our education so that we could never compete with them, or, even worse, simply killing us all. When you see multinational pharmaceutical corporations buying politicians and letting drugs flood the streets, when you see bureaucrats in some countries stealing international aid while their people starve, and when you see dictators sacrificing millions of lives on the battlefield simply for their own ambition, it wouldn't be surprising if the people holding unchecked AGI power turned out just as bad. Some cyberpunk fiction suggests that the oppressed might still find a way to fight back, but I think most of these stories are too optimistic. An AI that powerful could control every military system, making it almost impossible for humans to fight it and its masters. In my opinion, two works of fiction successfully describe what might happen in this kind of extreme case: “The Wages of Humanity” by Cixin Liu and “From the New World” by Yusuke Kishi. I'm not trying to sell books here, but if you read them, you will get a sense of how bad this situation could be.

Will there be a third stage, in which AGI becomes powerful enough to make high-level decisions on its own (an AGI president, for example)? No one knows. Most AI skeptics focus on the risks of this stage, warning that AI might treat humans as enemies and try to destroy us. I would argue that this is possible, but no one can know for sure. Meanwhile, an even more probable risk remains largely neglected: what if those in charge deliberately slow AI development, keeping it at the level that maximizes their own interests rather than the benefit of the entire human race? The Qianlong Emperor of Qing-dynasty China reportedly knew about the French Revolution and the steam engine, but chose to hide those ideas and technologies from his people so that they wouldn't destabilize his reign. Maybe we will never enter the third stage; maybe we don't even have to enter it to suffer the trouble AI brings us.

So, what is the takeaway? Perhaps we need to recognize that the main concern about a world with AGI is not what it could do, but who controls it. In recent years, the term “AI democracy” has attracted attention. The idea is that a powerful AI should not be owned by a particular company or country, but by the entire human race. The development of open-source AI platforms has helped somewhat: today, almost anyone can download open models such as BERT or LLaMA from GitHub or Hugging Face and use them to build powerful products. But will this last? The most powerful large language models, like GPT or Claude, cost millions of dollars or more to train, a sum far out of reach for most people. And once training is finished, it is natural for their owners to keep the models closed-source to protect their profitability. What about small, decentralized AIs? They have not yet proven as powerful as the large, centralized models. I don't think AI democracy is necessarily a myth, but we clearly still have a long way to go to achieve it.

There are, of course, many other AGI pitfalls. One significant one I want to bring up is that a powerful AGI could indulge people, making them too lazy to think or do anything. While there are examples like Norway, where people can live comfortably without necessarily having to work yet still choose to engage and innovate, there are contrasting cases like Nauru. Nauru experienced a rapid economic rise thanks to its rich phosphate reserves, but as those reserves dwindled, the country struggled to diversify its economy. It serves as a cautionary tale about becoming overly reliant on a single resource. Is this sharp contrast the result of different levels of education? Maybe. But raising education levels alone may not be enough. As ChatGPT has become increasingly popular, many teachers have noticed their students becoming lazier about thinking and about learning fundamental knowledge. Without sufficient fundamental knowledge, how can anyone innovate, inside the AI field or outside it? Perhaps no particular villain needs to deliberately slow AI progress; the laziness inherent in human nature may do it on its own. Perhaps what stops humanity from becoming a civilization that spans the universe is not that we can't build a powerful AGI, but that we are too lazy to build one. There are many potential pitfalls ahead, and we have barely started watching our step.